BEDLAM: A Synthetic Dataset of Bodies Exhibiting Detailed Lifelike Animated Motion

Michael J. Black, Priyanka Patel, Joachim Tesch, Jinlong Yang

(The authors contributed equally and are listed alphabetically)

Abstract

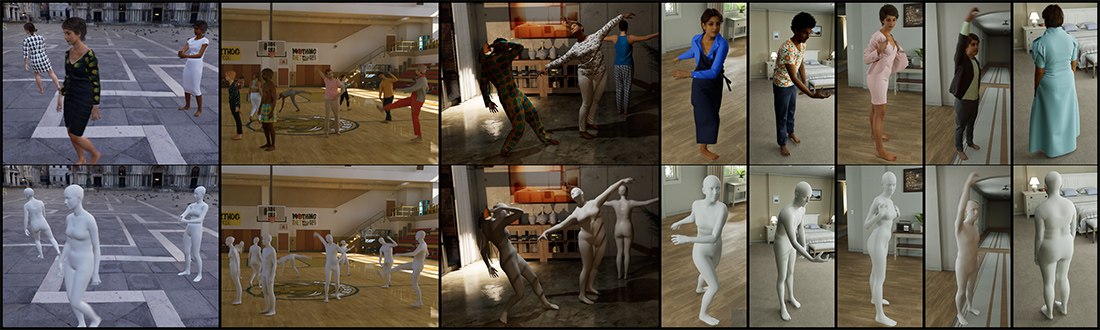

We show, for the first time, that neural networks trained only on synthetic data achieve state-of-the-art accuracy on the problem of 3D human pose and shape (HPS) estimation from real images. Previous synthetic datasets have been small, unrealistic, or lacked realistic clothing. Achieving sufficient realism is non-trivial and we show how to do this for full bodies in motion. Specifically, our BEDLAM dataset contains monocular RGB videos with ground-truth 3D bodies in SMPL-X format. It includes a diversity of body shapes, motions, skin tones, hair, and clothing. The clothing is realistically simulated on the moving bodies using commercial clothing physics simulation. We render varying numbers of people in realistic scenes with varied lighting and camera motions. We then train various HPS regressors using BEDLAM and achieve state-of-the-art accuracy on real-image benchmarks despite training with synthetic data. We use BEDLAM to gain insights into what model design choices are important for accuracy. With good synthetic training data, we find that a basic method like HMR approaches the accuracy of the current SOTA method (CLIFF). BEDLAM is useful for a variety of tasks and all images, ground truth bodies, 3D clothing, support code, and more are available for research purposes. Additionally, we provide detailed information about our synthetic data generation pipeline, enabling others to generate their own datasets.

News

- 2023-10-02: BEDLAM evaluation server is up

- 2023-07-04: Converted SMPL ground truth released

- 2023-03-21: BEDLAM paper selected as highlight at CVPR 2023

Dataset

- To access the dataset, please register at this website using the "Sign In" button at the top right. Registration requires you to read and accept the license agreement. Note that the download links work only from this website, and that redistribution of downloaded data is not permitted. Once you are signed in, you can access the Download area via the top menu link.

- The following synthetic image assets are available for download:

  - 10450 image sequences, 30fps, 1280x720

    - images (PNG)

    - depth maps (EXR/32-bit)

    - segmentation masks (PNG)

      - separate binary masks for subject body/clothing/hair and environment

    - movies (MP4)

    - ground truth for all sequences (CSV)

- The following body and clothing related assets are available for download:

  - body textures and clothing overlay textures

  - clothing assets

  - SMPL-X animation files

- We have also released the original 6fps image dataset, which was subsampled from the 30fps source data, so that you can replicate the experiments in the paper.
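The relationship between the two frame rates is simple arithmetic: 30 / 6 = 5, so a 6fps sequence keeps one frame in every five. The following is a minimal Python sketch of that kind of subsampling. The helper `subsample_frames`, the zero starting offset, and the file names are assumptions made for illustration. They are not part of the BEDLAM code, and the exact frames kept in the released 6fps dataset may differ.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def subsample_frames(
    frames: Sequence[T],
    src_fps: int = 30,
    dst_fps: int = 6,
    offset: int = 0,
) -> List[T]:
    """Keep every (src_fps // dst_fps)-th frame, starting at `offset`.

    Hypothetical helper: the starting offset is an assumption,
    not something documented for the released data.
    """
    if src_fps <= 0 or dst_fps <= 0:
        raise ValueError("frame rates must be positive")
    if src_fps % dst_fps != 0:
        raise ValueError("src_fps must be an integer multiple of dst_fps")
    step = src_fps // dst_fps  # 30 // 6 == 5
    if not 0 <= offset < step:
        raise ValueError("offset must lie in [0, step)")
    return list(frames[offset::step])


# Hypothetical file names covering one second of video at 30 fps.
frame_names = [f"frame_{i:04d}.png" for i in range(30)]
print(subsample_frames(frame_names))
```

With the default arguments, one second of 30fps footage is reduced to the six frames at indices 0, 5, 10, 15, 20, and 25.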

Code

- Training and Evaluation code

- Render pipeline code

  - Information and scripts for render data preparation (SMPL-X, Blender) and rendering (Unreal)

- Clothing processing code

Acknowledgements and Disclosure

We thank Giorgio Becherini for help with the clothing simulation and the clothing dataset release.

We thank STUDIO LUPAS GbR for creating the 3D clothing, WowPatterns and Publicdomainvectors.org for additional pattern designs, Meshcapade GmbH for the skin textures, Lea Müller for help removing self-intersections in high-BMI bodies, and Timo Bolkart for aligning SMPL-X to the Character Creator template mesh. We thank Tsvetelina Alexiadis, Leyre Sánchez, Camilo Mendoza, Mustafa Ekinci, and Yasemin Fincan for help with clothing texture generation. We thank Neelay Shah and Software Workshop for help with the evaluation server deployment.

We thank Benjamin Pellkofer for excellent IT support.

Blender (SMPL-X render data preparation) and Unreal Engine (rendering) were invaluable tools in the image dataset generation pipeline and we deeply thank all the respective developers for their contributions.

Michael J. Black (MJB) has received research gift funds from Adobe, Intel, Nvidia, Meta/Facebook, and Amazon. MJB has financial interests in Amazon, Datagen Technologies, and Meshcapade GmbH. While MJB is a consultant for Meshcapade, his research in this project was performed solely at, and funded solely by, the Max Planck Society.

Citation

@inproceedings{Black_CVPR_2023,

title = {{BEDLAM}: A Synthetic Dataset of Bodies Exhibiting Detailed Lifelike Animated Motion},

author = {Black, Michael J. and Patel, Priyanka and Tesch, Joachim and Yang, Jinlong},

booktitle = {Proceedings IEEE/CVF Conf.~on Computer Vision and Pattern Recognition (CVPR)},

pages = {8726-8737},

month = jun,

year = {2023},

month_numeric = {6}

}

Contact

- Questions: bedlam@tue.mpg.de

- Commercial licensing: ps-licensing@tue.mpg.de